Introduction

In this guide, you will learn how to deploy a Next.js Node server on AWS ECS Fargate. It is an easy, scalable way to deploy a Dockerised Next.js project, and it makes it simple to add more environments, keep them cleanly separated, and manage changes to all of them through a git repository (no dashboard fiddling!). This is my personal approach to deploying new projects.

If you're reading this, you've probably already got your own reason for deploying Next.js on AWS (with Terraform). If not, here are some good ones:

- No GitHub account restrictions: whether you deploy from an organisation repo or a private one, AWS treats it all the same (unlike Vercel).

- Easily manage sensitive environment variables with GitHub secrets, and spin up multiple separate environments.

- Use an in-memory cache in Node straight out of the box. Your server is a long-running process, so its state persists between requests for as long as the container runs (unlike Lambda!), which also means long-lived database connections are no problem.

- Infrastructure as code: clone the sample repo, add your own AWS keys and push to GitHub. Simple as that!

- Use up some of those AWS credits you have lying around and collecting dust.

First, you will get up and running right away; in the sections after that, I will explain what is going on.

Quickstart

I will cut to the chase and show you how to get up and running as quickly as possible. The process is simple, and you will not have to touch the AWS console… much!

- Get your AWS keys: get hold of the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY credentials for an IAM user in your AWS account. Store these somewhere safe; you will need them later.

- Create an S3 bucket: this will hold your remote Terraform state. Leave every setting at its default and give the bucket a unique name like "[your name]-terraform-state". Remember this name!

- Clone my repo (make sure to give it a star first ⭐️) and push a private copy to your own GitHub account.

- In the cloned repo, edit the backend.tf file and change bucket = "YOUR_BUCKET_NAME" to the name of the bucket you created earlier. Commit and push.

- In the Settings tab of your newly published repository, click Secrets and variables > Actions and add two repository secrets: AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY (the keys from the first step).

- You are now ready to deploy: in the Actions tab of your repo, you should see a workflow called "Deploy Prod" in the sidebar. Click it and then press Run workflow. Wait for it to complete, and your app should now be deployed! But where is it?

- You can find the temporary URL that hosts your site in the AWS console under EC2 > Load balancers > (your load balancer). The DNS name shown there is your app's live URL. We will talk later about routing it through a custom domain with a DNS provider of your choice (like Cloudflare).
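For reference, the remote-state setting you edit in backend.tf is Terraform's S3 backend. The block below is a minimal sketch of what such a file typically looks like; the key and region values are assumptions, so keep whatever the repo already has and only change the bucket name. Backend blocks cannot reference Terraform variables, which is why the bucket name has to be edited directly in the file.

```hcl
# backend.tf (sketch; key and region are assumed values, not taken from the repo)
terraform {
  backend "s3" {
    bucket = "YOUR_BUCKET_NAME"       # replace with the bucket you created earlier
    key    = "prod/terraform.tfstate" # assumed path of the state file inside the bucket
    region = "eu-west-2"              # assumed; must be the region your bucket lives in
  }
}
```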

Architecture

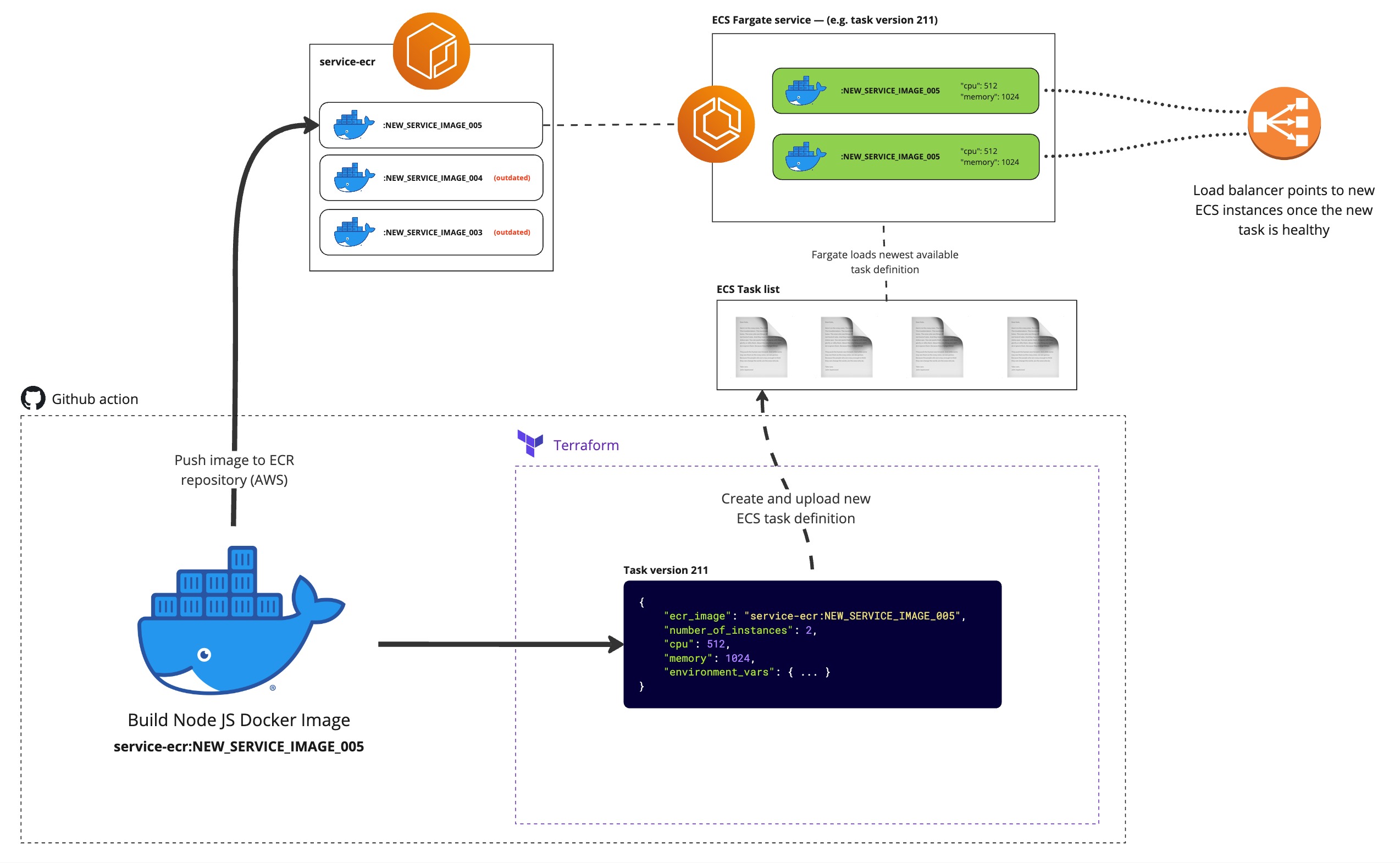

So you've just deployed the app, but what is actually going on? Reading through the GitHub workflow gives you a rough idea, and the diagram above shows it in slightly more detail.

Firstly, an ECR repository is provisioned with Terraform (ecr.tf). An ECR repository is simply a registry that holds tagged Docker images. The Docker image is then built and pushed to that repository for later use. Afterwards, the rest of the Terraform infrastructure is provisioned (load balancer, ECS, security groups, etc.) and a new ECS task definition is created that references the just-uploaded Docker image. ECS then runs that image as a Fargate task, using the parameters (and environment variables) specified in the task definition.
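To make this flow more concrete, here is a minimal sketch of the two resources at its heart: the ECR repository and a Fargate task definition whose image points at that repository. The resource names, CPU/memory sizes, container port, the var.image_tag variable (assumed to be supplied by the workflow after the image is pushed) and the aws_iam_role.ecs_execution role are all illustrative assumptions, not necessarily what the repo uses.

```hcl
# Sketch only: names, sizes, the role and var.image_tag are assumptions.
resource "aws_ecr_repository" "app" {
  name = "nextjs-prod" # hypothetical repository name
}

variable "image_tag" {
  type        = string
  description = "Tag of the image the workflow just pushed (assumed input)"
}

resource "aws_ecs_task_definition" "app" {
  family                   = "nextjs-prod"
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc" # Fargate tasks must use awsvpc networking
  cpu                      = "256"    # 0.25 vCPU
  memory                   = "512"    # MiB; must be a valid pairing with cpu on Fargate

  # Hypothetical role, defined elsewhere, that lets ECS pull the image from ECR
  execution_role_arn = aws_iam_role.ecs_execution.arn

  container_definitions = jsonencode([
    {
      name      = "nextjs"
      image     = "${aws_ecr_repository.app.repository_url}:${var.image_tag}" # the pushed image
      essential = true
      portMappings = [
        { containerPort = 3000, protocol = "tcp" } # Next.js listens on 3000 by default
      ]
      environment = [
        { name = "NODE_ENV", value = "production" }
      ]
    }
  ])
}
```

An aws_ecs_service with launch_type = "FARGATE" would then keep the desired number of these tasks running behind the load balancer.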

Terraform and multiple environments

Looking in the terraform directory, you will see two subdirectories: module and prod. The prod directory is the entry point for Terraform. If you look in prod/main.tf, you will see that it sources the module directory while passing in a series of custom variables. The idea is that if you copy the prod directory as dev (for example) and replace every instance of prod in that directory with dev, you will be able to spin up a second environment that sources the Terraform module in the same way!
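As a rough sketch, a copied terraform/dev/main.tf might differ from prod only in the values it passes to the shared module. The input names below (environment, app_name) are hypothetical; use whatever inputs module/variables.tf actually declares. One detail worth checking when you copy the directory is the backend key: it must differ between environments, otherwise both would read and write the same state file.

```hcl
# terraform/dev/main.tf: copied from prod/main.tf with "prod" replaced by "dev".
# Input names are hypothetical; they must match module/variables.tf.
module "app" {
  source = "../module" # both environments reuse the same module code

  environment = "dev"
  app_name    = "nextjs-dev"
}

# Also give dev its own state file in its backend.tf, e.g. key = "dev/terraform.tfstate",
# so that applying dev never touches the prod state.
```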

Runtime environment variables are injected into the task definition (task.tf) and are loaded during the terraform plan step of the GitHub Action. You can make any environment variable visible to Terraform by adding a TF_VAR_ prefix: Terraform strips the prefix and treats the rest as the variable name, so TF_VAR_db_password sets var.db_password. Every variable loaded from the action needs to be declared in prod/variables.tf, and every variable referenced in terraform/module needs to be passed in by main.tf and also declared in module/variables.tf.
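To illustrate that chain, here is how a single secret could travel from the workflow to the container, using db_password (the variable the database example later in this guide relies on). The workflow is assumed to expose it as an environment variable named TF_VAR_db_password, for instance from a GitHub secret; the file layout follows the description above, but the exact contents are a sketch.

```hcl
# terraform/prod/variables.tf
# Terraform fills this from the TF_VAR_db_password environment variable.
variable "db_password" {
  type      = string
  sensitive = true # redacts the value in plan/apply output
}

# terraform/prod/main.tf: pass the value into the shared module (other inputs omitted)
module "app" {
  source      = "../module"
  db_password = var.db_password
}

# terraform/module/variables.tf: the module must declare it as well
variable "db_password" {
  type      = string
  sensitive = true
}

# terraform/module/task.tf can now reference var.db_password,
# e.g. inside the container definition's environment array.
```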

Using a custom domain

Hosting your app from an AWS-generated, non-HTTPS load balancer URL is not ideal. If you want to use a custom domain, you can add that domain to Cloudflare and create a proxied CNAME DNS record that points to the load balancer URL. You should now be able to reach your app over HTTPS on your custom domain. Cloudflare terminates HTTPS at its edge; because the load balancer itself only serves HTTP, this needs Cloudflare's Flexible SSL/TLS mode, and the hop from Cloudflare to AWS stays unencrypted. To prevent direct access through the original load balancer domain, you can allow only the IP ranges owned by Cloudflare and block everything else. This ensures that the only route to your site is through Cloudflare's network.
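One way to express that allowlist in Terraform is sketched below. It assumes the hashicorp/http provider (version 3 or later, which exposes response_body) to download Cloudflare's published IPv4 list, and a hypothetical aws_security_group.lb as the load balancer's security group. Any existing rule that opens port 80 to 0.0.0.0/0 would need to be removed for this to have an effect, and Cloudflare's IPv6 ranges (published as a separate list) are left out for brevity.

```hcl
# Sketch: let only Cloudflare's IPv4 ranges reach the load balancer on port 80.
data "http" "cloudflare_ipv4" {
  url = "https://www.cloudflare.com/ips-v4"
}

locals {
  # The endpoint returns one CIDR block per line
  cloudflare_ipv4_cidrs = split("\n", trimspace(data.http.cloudflare_ipv4.response_body))
}

resource "aws_security_group_rule" "lb_http_from_cloudflare" {
  type              = "ingress"
  from_port         = 80
  to_port           = 80
  protocol          = "tcp"
  cidr_blocks       = local.cloudflare_ipv4_cidrs
  security_group_id = aws_security_group.lb.id # hypothetical load balancer security group
}
```

If the security group defines its rules inline instead, put the same cidr_blocks in its ingress block, since mixing inline rules with aws_security_group_rule resources on one group causes conflicts.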

Adding a PostgreSQL database

The great thing about Terraform is that when you want to add new infra, you don’t have to lay a finger on the AWS dashboard. We can spin up an RDS database and load the DATABASE_URL straight into our app, just by changing a few lines.

To add the database, search the whole repo for the comment "UNCOMMENT FOR DATABASE" and uncomment the lines it marks. After doing this, a PostgreSQL database should spin up on the next deployment.
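For orientation, the resource those commented-out lines enable is an aws_db_instance. The DATABASE_URL example below refers to it as aws_db_instance.db, so that name is reused here; every other value (identifier, instance class, storage size, database name) is an assumption for illustration, and the repo's own settings may differ.

```hcl
# Sketch of an RDS PostgreSQL instance; values other than the resource name are assumptions.
resource "aws_db_instance" "db" {
  identifier        = "nextjs-prod-db" # hypothetical
  engine            = "postgres"
  instance_class    = "db.t3.micro"
  allocated_storage = 20    # GiB
  db_name           = "app" # hypothetical initial database

  username = var.db_username
  password = var.db_password

  publicly_accessible = true # matches the all-open setup warned about below
  skip_final_snapshot = true # lets terraform destroy skip the final backup
}
```

Because the endpoint attribute is "host:port", a connection string that targets this initial database would append /${aws_db_instance.db.db_name} after it.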

If you want to pass the environment variable DATABASE_URL into your app, add the following lines to the environment array in your ECS task definition.

```hcl
// task.tf (inside the container definition's "environment" array)
{
  "name": "DATABASE_URL",
  "value": "postgresql://${var.db_username}:${var.db_password}@${aws_db_instance.db.endpoint}"
},
// ...
```

WARNING: This RDS instance is open to all access. In a production environment, you will not want to expose your database to the internet; instead, keep it inside a private VPC subnet or narrowly limit the IP addresses that can connect to it.
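A minimal sketch of the narrower option is a security group that only accepts PostgreSQL traffic (port 5432) from the ECS tasks' own security group. aws_security_group.ecs_tasks and var.vpc_id are hypothetical names for things the module would need to provide; the database instance would then set publicly_accessible = false and attach this group.

```hcl
# Sketch: only the ECS tasks can reach the database.
resource "aws_security_group" "db" {
  name   = "nextjs-prod-db" # hypothetical
  vpc_id = var.vpc_id       # hypothetical

  ingress {
    description     = "PostgreSQL from the ECS tasks only"
    from_port       = 5432
    to_port         = 5432
    protocol        = "tcp"
    security_groups = [aws_security_group.ecs_tasks.id] # hypothetical tasks security group
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# Then, in aws_db_instance.db:
#   publicly_accessible    = false
#   vpc_security_group_ids = [aws_security_group.db.id]
```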

Summary

Hopefully this has been a useful guide to getting up and running with Terraform and AWS Fargate. Terraform has a bit of a learning curve, but once you understand how it executes and how it manages state, it becomes a valuable and flexible tool. If you have any specific questions or feedback about this article, you can get in touch with me via oscars.dev, and in case you missed it earlier, here is a link to my repo to get started with Next.js, Fargate and Terraform. Enjoy! https://github.com/OZCAP/nextjs-fargate-terraform

Further reading

A better practice for handling AWS authentication in GitHub Actions is to use an AWS IAM role instead of long-lived access keys. This allows you to be a lot more granular with how permissions are allocated. Alvaro Nicoli has written an article that is a good continuation of this one, should you wish to go down this route: read the article here.